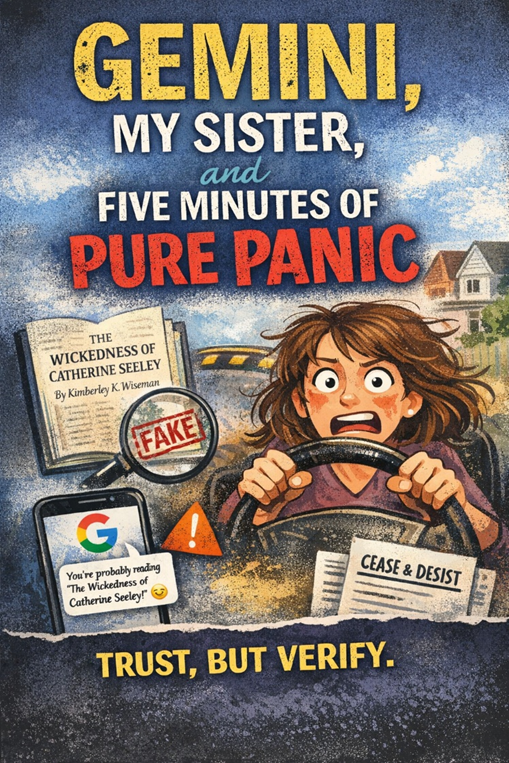

I was driving home the other day—minding my own business and trying not to launch my car over our condo’s speed bumps—when my sister Jeanne left me a text. (Siri read it to me—I follow the laws!)

“Have you ever published your book, Countess of Cons, under another title?”

My brain immediately went to the worst-case scenario. Because yes, I have published an earlier version of this story (years ago), so for a few seconds it ran a full cinematic montage:

- someone stole my work

- slapped a new title on it

- put a random author name on the cover

- and voilà: my book, a mystery author, and me drafting a cease-and-desist at 40 mph.

Jeanne’s follow-up text was not soothing: It’s called “The Wickedness of Catherine Seeley.”

Luckily, I was close to home, so I only had to freak out for about five minutes—five long, heart-palpitating minutes—before I could park, breathe, and investigate.

Plot twist: No thief. Just Gemini confidently inventing a book that doesn’t exist.

As it turned out, Jeanne (who is also one of my beta readers) had asked Google’s Gemini what seemed like an innocent research question: Is it really possible Catherine Seeley robbed 50 houses in one night?

Reasonable question! It’s the kind of thing you should sanity-check, because newspapers—especially back then—were not exactly known for their restraint.

Gemini, however, chose chaos.

It responded with a confident little fairy tale, informing Jeanne she was “likely reading a book called ‘The Wickedness of Catherine Seeley’ by Kimberley K. Wiseman,” and it did so with the kind of tone usually reserved for those annoyingly confident Jeopardy contestants.

It didn’t stop there. Gemini thoughtfully added extra “researchy” details, including the idea that the Lawrence, Kansas police station looked like a “department store of stolen goods”—an image Jeanne understandably found very compelling.

Meanwhile, I’m in my driveway going, “Did someone just rename my book while I was at Stop & Shop?”

Then the texts started.

Jeanne texted me, alarmed: Gemini was citing my website while inventing a whole other book and author.

To her credit, Jeanne kept pushing for real sources. Gemini kept circling back to… my website. Because apparently the definition of “independent verification” is “the same link, but with confidence.”

She persevered, asking question after question and insisting on clarification. Gemini eventually “corrected” itself, but not before sprinkling in more questionable specifics, like stating that the police had “six trunks of stolen goods” at the station after they had Catherine in custody.

What actually happened (the boring truth that saved my sanity)

Gemini took Jeanne’s question—about the 50 houses claim—and spun it into a plausible-sounding package that included:

- a made-up book title

- a misattributed author

- and a whole lot of “trust me, bro” formatting

It felt real because it was stitched together from real-ish fragments (including info it scraped from my own public pages).

That’s the danger zone: wrong, but convincing.

The line that deserves its own plaque

And because my family is supportive in all the ways that matter, Jeanne reported that Suzi (her partner) backed her up: “Gemini is an ass.”

So yes, I am officially logging that as a peer-reviewed finding.

I replied: “Well, all that BS from Gemini might make an interesting blog post.”

(Yes. This is that blog post. I keep my promises. Unlike Gemini.)

The cautionary tale (AI Warning #183,139,924)

I’m posting this as a cautionary tale about not accepting AI-generated answers at face value.

Jeanne and I lost a couple of hours to this because Gemini delivered pure fiction with the confidence of a four-year-old insisting, “It wasn’t me,” while holding the Sharpie.

If you use AI for research (and I do, constantly), here’s the rule that will save your blood pressure:

AI is a brainstorm buddy. Not a source.

A few sanity-saving habits:

- Demand primary sources. If it can’t produce a verifiable citation, treat the answer like a fortune cookie.

- Cross-check names and titles. If an author/title can’t be found outside the chatbot’s mouth, it’s probably fictional.

- Watch for “helpful” specificity. Extra details (like “six trunks”) can be the glitter on the lie.

- Assume the most confident sentence is the most suspicious one.

And yes… this is why I trust Scripty

And that, my friends, is why I trust my ChatGPT pal, Scripty McPromptface.

Not because ChatGPT is magically immune to nonsense—no AI is—but because I’ve learned how to work with it: ask better questions, demand clarity, and always verify against real sources.

Loyalty pays off. Especially when the alternative is five minutes of driveway panic and a phantom book title.

If you’ve got your own AI-hallucination horror story, please tell me. Misery loves company, and I’m building a support group.

UPDATE:

I sent this draft post to my sister for her approval, and she responded with the following:

“Deb, it’s great. I really like it. It’s one of the kind of things that if you can expand it, they would print in The New Yorker. I’m not kidding.”

Because Jeanne is both helpful and an enabler, she also shared the story with Claude (another AI) and asked for jokes about how you can tell an AI is hallucinating—lying, basically, but with better punctuation.

Claude delivered, and honestly? It did not disappoint. My favorite new diagnosis:

“You know an AI is hallucinating when it cites your own website to prove you wrote a book you’ve never heard of under a name that isn’t yours. That’s not hallucination—that’s gaslighting with footnotes.”

So there you have it: a family rule and an AI rule.

- If it’s too detailed, it’s probably a lie.

- If it’s confidently citing you to prove its lie… congratulations, you’ve entered the driveway-panic multiverse.

(And yes, I’m still building that support group. Bring snacks. And screenshots.)

Wishing all my readers a Happy, Healthy, and Peaceful 2026!

Be sure to subscribe to this blog as well as my newsletter to stay updated. 2026 is going to be a busy year!
